Spoilers: It’s not elephant power.

A friend of mine in college recently posted the following open question:

There has been a lot of brouhaha recently about “there are X times the number of jobs in ‘green energy’ than in coal.” Relating to the number of jobs, can someone tell me how many megawatts/gigawatts are produced on a per capita basis in each of these energy “sectors?”

My initial thought was that this was really a loaded question, a fallacious argument that ‘green energy’ wasn’t as efficient because the cost to build out new capacity (often referred to as Capital Expenditure, or CAPEX) far exceeded the operating expenditures (OPEX) required to maintain power generation from existing plants. Of course coal plants are less labor- and cost-intensive: they already exist! I also knew from my previous research on this subject that overall costs of solar plants were approaching parity with traditional coal plants. My initial instinct was to pour cold water on the whole argument[i].

However, before I hit send, I thought about the question a bit more. I recalled what I knew about oil refiners in the US, and how building new capacity usually costs much more than simply buying or expanding existing facilities, and thus no new refineries have been built in decades. Maybe my friend was right, and reports of cost parity of green energy sources relied too heavily on a fallacy of their own: treating the alternative as new coal plants, rather than as the maintenance and expansion of existing units.

I left a comment saying this might be something that would be interesting to look into. I assume Facebook’s algorithm took note of my reply because it then put a comment made by another of my friends five days earlier on the top of my feed:

It’s amazing to me that the solar industry flaunts its terrible productivity as a selling point. “We produce the least terawatt-hours using the most workers!” That’s not a benefit!

That comment was issued with a link to a Fast Company article trumpeting that in the US, solar energy now provides twice as many jobs as coal.

I do agree with both of my friends that claiming certain investments create jobs is dubious. I would rather focus on the cost of each alternative in dollar terms, to make sure these investments are economically sustainable, or at least approaching sustainability, so that any jobs they create do not suddenly vanish once political winds change or subsidies expire. For that reason, understanding how close green energy is to competing economically with legacy energy sources is the exercise I took on here. Note that when I say energy in the scope of this post, I am referring to electric power generation and not discussing fuels directly used for personal transport.

The Data

The first data point I wanted to explore was something that was said in the aforementioned Fast Company article, which stated:

While 40 coal plants were retired in the U.S. in 2016, and no new coal plants were built, the solar industry broke records for new installations, with 14,000 megawatts of new installed power.

If my hypothesis was that existing coal capacity would be more competitive than newly-built capacity, the fact that 40 existing coal plants were shut down with no new ones built would seem to indicate that this is not true. However, following the oil refinery model where refineries have been closing for decades without replacements being built, this could also just mean the lost capacity was being offset by increases in production in other units. Following the article’s source for the statement led me back to the US Energy Information Administration (EIA), a reputable government source which I have used multiple times for other pieces on this blog. The EIA provides tons of data as well as projections, both of which can be used to infer how different technologies will compete for utilization now and in the future.

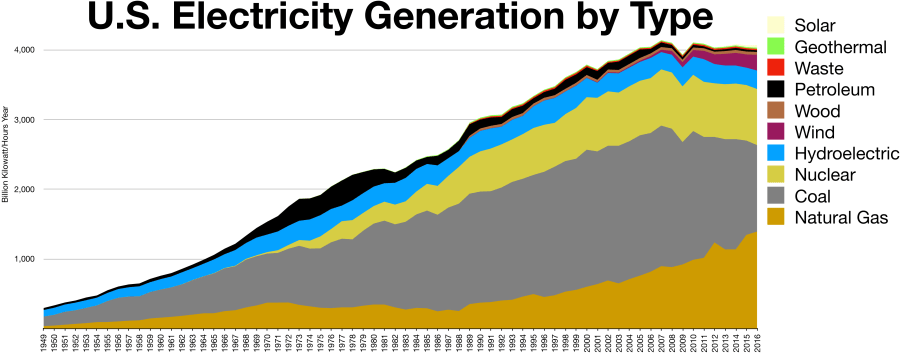

The EIA provides monthly spreadsheets tracking almost all US power generators with some exceptions, and each one appears to contain details for all individual US power generation plants with capacities over 1 MW[ii] as well as planned plant retirement times. There are 20,870 plants listed in the “operating” tab of the nearly 7 megabyte Excel spreadsheet that in all have 1,183,011 MW of total listed nameplate capacity.[iii]

By filtering the data for the most recent spreadsheet from March 2017, I can see that plants representing 26,614 MW of capacity have planned retirement dates between March 2017 and December 2021. Only 215.5 MW of this retired capacity represents ‘green energy’, and of this 215.5, 207.6 is the result of the planned retirement of some of the capacity of the Wanapum hydroelectric plant in Washington State, which has been in operation since 1963, although a quick Google search makes it appear that this capacity is actually going to be replaced and expanded. In terms of capacity, most of the retirements affect coal (12,163 MW), Natural Gas (9,320 MW), and Petroleum Liquids (778 MW) facilities.

While the capacity being retired between now and the end of 2021 is very low relative to total capacity, replacing this with renewable resources would account for over two-thirds of the EIA’s current projected green energy growth between 2017 (207,200 MW) and 2021 (244,630 MW)[iv]. The “Planned” plant tab of the EIA generator spreadsheet backs this up, with the 113,698 MW of planned capacity to be started up between now and 2027 much more heavily tilted towards green (or at least “Carbon-free”) energy sources than the existing energy mix. These plants include wind (22,570 MW, 19.9%), solar (9,949 MW, 8.8%), nuclear (5,000 MW, 4.4%), as well as hydroelectric and geothermal combining for an additional 997 MW (0.9%).
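The percentages and the "over two-thirds" claim above can be checked with a few lines of arithmetic. This is only a sketch using the MW figures quoted in the text; the variable and function names are my own, and nothing here reads the EIA spreadsheet itself.

```python
# MW figures quoted in the text (EIA generator spreadsheet, "Planned" tab).
planned_total_mw = 113_698
planned_by_source_mw = {
    "wind": 22_570,
    "solar": 9_949,
    "nuclear": 5_000,
    "hydro + geothermal": 997,
}


def share_pct(part_mw, total_mw):
    """Return part_mw as a percentage of total_mw."""
    return 100 * part_mw / total_mw


# Share of planned capacity for each carbon-free source, rounded to 0.1%.
shares = {
    source: round(share_pct(mw, planned_total_mw), 1)
    for source, mw in planned_by_source_mw.items()
}
carbon_free_share = round(
    share_pct(sum(planned_by_source_mw.values()), planned_total_mw), 1
)

# Planned retirements compared with projected green energy growth, 2017 to 2021.
retiring_mw = 26_614
green_2017_mw = 207_200
green_2021_mw = 244_630
projected_growth_mw = green_2021_mw - green_2017_mw
retirement_vs_growth = retiring_mw / projected_growth_mw

for source, pct in shares.items():
    print(f"{source}: {pct}%")
print(f"carbon-free total: {carbon_free_share}%")
print(f"retirements / projected green growth: {retirement_vs_growth:.2f}")
```

The last ratio comes out a little above 0.7, which is where "over two-thirds" comes from.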

This is good and bad news for the future of green energy. On one hand, it would appear to support my position that there is significant growth available for renewables to compete with other sources where new infrastructure is required. The downside of this is that following the EIA projections through 2050 only gives a total green energy capacity of 433,490 MW, well under 5% of the total electrical generation asset mix.

The Alternative

You might have noticed that the “Carbon-free” energy sources only represent about a third of the total planned capacity to be added. But there are no coal plants replacing the ones being shut down. Instead, almost two-thirds of the planned new plants utilize Natural Gas (72,659 MW, 63.9%).

If green energy has a real threat going forward, natural gas is a…uh, erm…natural choice[v]. In terms of price per unit energy, Natural gas has cost only a fraction of what oil does, even when oil prices crashed[vi]. The current price of Natural Gas is approximately $3/million BTU, and a barrel of oil contains about 5.8 million BTU, making the cost of Natural gas approximately $17.4 per barrel of oil equivalent (BOE).
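For anyone who wants to rerun the barrel-of-oil-equivalent conversion with a different gas price, here is a minimal sketch. The 5.8 million BTU per barrel figure is the one used above; the function name is just for illustration.

```python
# Energy content of a barrel of oil, in millions of BTU (figure used in the text).
MMBTU_PER_BOE = 5.8


def gas_price_per_boe(price_per_mmbtu):
    """Convert a Natural gas price in $/million BTU to $/barrel of oil equivalent."""
    return price_per_mmbtu * MMBTU_PER_BOE


current_price = gas_price_per_boe(3.0)
print(f"${current_price:.2f} per BOE")
```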

From an environmental perspective, Natural gas is cleaner than coal and produces about half the amount of CO2 per unit energy produced. This is primarily because Natural gas has a higher heating value per unit weight of fuel than coal (Coal heating value is generally between 7,000 and 14,000 BTU/lb, while Natural gas is up to 21,500 BTU/lb). In fact, the US’s aggressive shifting to Natural gas-based electricity generation was cited by many as a reason the Paris climate accord was unfair to the US, as we had already reduced our Carbon emissions quite a bit because of technological advances that allowed the US to replace chunks of coal power with Natural gas:

As I indicated in my comments yesterday, and the president emphasized in his speech, this — this administration and the country as a whole — we have taken significant steps to reduce our CO2 footprint to levels of the pre-1990s.

What you won’t hear — how did we achieve that? Largely because of technology, hydraulic fracturing and horizontal drilling, that has allowed a conversion to and natural gas and the generation of electricity. You won’t hear that from the environmental left. –EPA Head Scott Pruitt, June 2nd, 2017

I don’t want to wander back into climate change again (although that is and will continue to be a recurring theme here), but I do bring this up because when judging renewable energy on its cost merits, I believe too much emphasis has been placed on coal and not enough on Natural gas.

And the Winner Is?

So, if you think the EIA has been pretty informative about this whole topic, you’re right. In fact, they basically have the answer to the cost question already spelled out, but that wouldn’t have made for a great discussion. Here are the CAPEX and OPEX numbers EIA gives for Natural gas compared to the most cost-effective wind and solar options (for those of you that did not bother to click the link above to see the raw data, EIA did note that these are unsubsidized costs based in 2016 dollars). The CAPEX and OPEX numbers are what I pulled from EIA, while the hypothetical CAPEX and OPEX were calculated in Excel based on a theoretical 100 MW plant.

First up, conventional fired combined cycle Natural gas:

| Natural Gas (Fired Combined Cycle) | Conventional | Advanced | Natural Gas (Advanced with Carbon Capture and Sequestration) |
| --- | --- | --- | --- |
| CAPEX ($/kW) | 969 | 1013 | 2153 |
| OPEX (Fixed, $/kW-yr) | 10.93 | 9.94 | 33.21 |
| OPEX (Variable, $/MW-hr) | 3.48 | 1.99 | 7.08 |
| Hypothetical CAPEX, 100 MW Plant | $96,900,000 | $101,300,000 | $215,300,000 |
| Hypothetical Annual OPEX, 100 MW Plant | $4,141,480 | $2,737,240 | $9,523,080 |
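The hypothetical 100 MW figures can be reproduced from the per-unit EIA numbers if you assume the plant runs at full output for all 8,760 hours of the year. That assumption is inferred from the fact that it matches the numbers in these tables, and the `capacity_factor` parameter below is my own addition for anyone who wants to relax it. A rough Python sketch of the spreadsheet arithmetic:

```python
HOURS_PER_YEAR = 8760
KW_PER_MW = 1000


def hypothetical_capex(capex_per_kw, plant_mw=100):
    """One-time build cost in dollars for a plant of plant_mw megawatts."""
    return capex_per_kw * plant_mw * KW_PER_MW


def hypothetical_annual_opex(fixed_per_kw_yr, variable_per_mwh,
                             plant_mw=100, capacity_factor=1.0):
    """Annual operating cost in dollars: fixed part plus variable part.

    capacity_factor=1.0 means full output all year (an assumption that
    happens to reproduce the table values).
    """
    fixed = fixed_per_kw_yr * plant_mw * KW_PER_MW
    variable = variable_per_mwh * plant_mw * HOURS_PER_YEAR * capacity_factor
    return fixed + variable


# (CAPEX $/kW, fixed OPEX $/kW-yr, variable OPEX $/MW-hr) from the EIA tables.
plants = {
    "Combined cycle, conventional": (969, 10.93, 3.48),
    "Combined cycle, advanced": (1013, 9.94, 1.99),
    "Combined cycle, advanced with CCS": (2153, 33.21, 7.08),
    "Solar (photovoltaic)": (2277, 21.66, 0),
    "Wind (onshore)": (1686, 46.71, 0),
}

for name, (capex, fixed, variable) in plants.items():
    total_capex = hypothetical_capex(capex)
    annual_opex = hypothetical_annual_opex(fixed, variable)
    print(f"{name}: CAPEX ${total_capex:,.0f}, annual OPEX ${annual_opex:,.0f}")
```

Passing a lower `capacity_factor` shrinks only the variable part, so it changes the gas numbers but leaves wind and solar untouched.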

Fired Natural Gas Turbine (this is what we use on the FPSO where I work)[vii]:

| Natural Gas (Fired Combustion Turbine) | Conventional | Advanced |
| --- | --- | --- |
| CAPEX ($/kW) | 1092 | 672 |
| OPEX (Fixed, $/kW-yr) | 17.39 | 6.76 |
| OPEX (Variable, $/MW-hr) | 3.48 | 10.63 |
| Hypothetical CAPEX, 100 MW Plant | $109,200,000 | $67,200,000 |
| Hypothetical Annual OPEX, 100 MW Plant | $4,787,480 | $9,987,880 |

Finally, Wind and Solar:

| Wind, Solar | Solar (Photovoltaic) | Wind (Onshore) |
| --- | --- | --- |
| CAPEX ($/kW) | 2277 | 1686 |
| OPEX (Fixed, $/kW-yr) | 21.66 | 46.71 |
| OPEX (Variable, $/MW-hr) | 0 | 0 |
| Hypothetical CAPEX, 100 MW Plant | $227,700,000 | $168,600,000 |
| Hypothetical Annual OPEX, 100 MW Plant | $2,166,000 | $4,671,000 |

Based on the EIA numbers, the cheapest option by far is advanced or conventional Natural gas plants. However, if you include carbon capture and sequestration (CCS) costs, wind and solar would seem to come out on top (although solar only marginally so on the strength of its much lower OPEX in that scenario).
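One rough way to see how CAPEX and OPEX trade off is to ask how many years of operation it takes for a higher-CAPEX, lower-OPEX option to catch up with a cheaper-to-build one. The sketch below is my own simplification, not an EIA method: it uses the hypothetical 100 MW totals from the tables, ignores discounting, plant lifetime, and real-world capacity factors, and treats OPEX as constant every year.

```python
def cumulative_cost(capex, annual_opex, years):
    """Undiscounted total spend after a given number of years of operation."""
    return capex + annual_opex * years


def breakeven_years(capex_a, opex_a, capex_b, opex_b):
    """Years until option A's cumulative cost drops to option B's.

    Returns 0.0 if A is already no more expensive to build and cheaper to
    run, and None if A never catches up through operating savings.
    """
    if opex_a >= opex_b:
        return None
    if capex_a <= capex_b:
        return 0.0
    return (capex_a - capex_b) / (opex_b - opex_a)


# Hypothetical 100 MW totals (CAPEX $, annual OPEX $) taken from the tables.
gas_conventional = (96_900_000, 4_141_480)
gas_with_ccs = (215_300_000, 9_523_080)
solar = (227_700_000, 2_166_000)
wind = (168_600_000, 4_671_000)

solar_vs_ccs = breakeven_years(*solar, *gas_with_ccs)
wind_vs_ccs = breakeven_years(*wind, *gas_with_ccs)
solar_vs_conventional = breakeven_years(*solar, *gas_conventional)
wind_vs_conventional = breakeven_years(*wind, *gas_conventional)

print("solar vs gas with CCS:", solar_vs_ccs)
print("wind vs gas with CCS:", wind_vs_ccs)
print("solar vs conventional gas:", solar_vs_conventional)
print("wind vs conventional gas:", wind_vs_conventional)
```

Under these simplifications, solar catches up with gas plus CCS in under two years, while against conventional combined cycle it would take decades, which is consistent with the reading of the tables above.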

There is a significant cost differential created by the need for CCS that shifts the equation. However, given that even using Natural gas without CCS does significantly cut overall CO2 emissions when replacing coal facilities, there is still some environmental driver to employ that technology even without CCS, as a “stepping stone” to environmentally friendlier power generation.

For those looking for a talking point against Natural gas: from a long-term environmental viewpoint, cheap Natural gas could stall efforts to install permanent green energy solutions, and by some estimations could eventually leave us in a worse position than we are in currently. Also, cutting our carbon footprint in half is great, but if a country like India increased their per capita electricity usage to even a quarter of that of the US using Natural gas, then the net impact would be an increase in CO2 emissions[viii]. It may seem ironic that our great achievement in cutting CO2 emissions through the installation of Natural gas fired generation facilities would result in absolutely massive overall increases if replicated throughout the world, but that is a natural consequence of living in a fully developed country with energy demands an order of magnitude higher than that of the developing world.

In any event, EIA projects that, on our current path, even applying both of these technologies will not result in a dramatic shift in CO2 emissions by 2050, with or without adherence to the Obama Administration’s Clean Power Plan (CPP). The US per-capita carbon footprint will fall from its current 16.3 metric tons/year to either 12.7 (22.1% reduction) with CPP or 14.0 (14.1% reduction) without it.

I won’t be researching the projected external costs and consequences of climate change in this article, but I can state with confidence that investment in Carbon-neutral energy will have to accelerate at a much faster pace if we plan to effectively mitigate them. Of course, as is the case with emerging technologies, I’m not sure what green energy might look like in 5 or 10 years. I used to think that green energy was a great idea but an investment with costs an order of magnitude higher than conventional fuels. This isn’t the case, and if there are even a few marginal breakthroughs left to be found in the field, the situation could easily be flipped on its head, with Carbon on the losing end. I don’t know how, if, or when this might happen, but I may take that on as a separate entry later.

How Does This Money Support Jobs?

Going back to the original question in this post, CAPEX money is generally a one-time cost for construction of a plant. As noted, this is very labor-intensive, which is why the solar and wind energy companies can boast about how many jobs they create compared to coal. Variable OPEX costs generally refer to fuel, which is why these are 0 for wind and solar. However, money paid for Natural gas will directly support jobs in the Natural gas industry. Fixed OPEX costs are more likely to include maintenance and repairs, which also require skilled labor.

For me, while I concur with my second friend that from an economic standpoint it’s generally better to focus first on the per-capita value from the jobs you create than on the quantity, I don’t necessarily agree with the idea that the only thing we get in this case is energy. Without an honest discussion about how to quantify the costs of externalities associated with CO2 emissions, we can’t really pass judgement.

Thanks for making it to the end. I didn’t make it easy this time, only one safari picture/sight gag (I may add some later if anyone has any ideas). As always, let me know what you think, especially if you think I screwed something up.

[ix]

[i] It’s amazing how often I, and everyone else, mistake gasoline for ‘cold water’ when trying to end an internet argument. I’ll talk about that more in another post.

[ii] Although the notes on the file claim “Capacity from facilities with a total generator nameplate capacity less than 1 MW are excluded from this report. This exclusion may represent a signifciant portion of capacity for some technologies such as solar photovoltaic generation,” there are some plants with a capacity of <1 MW in the list. Also, the word “significant” is misspelled in the report and this seems a suitable forum to issue a public service reminder that Excel doesn’t spell check cell text by default.

[iii] As mentioned in a previous blog post, US electricity consumption is 3,913,000,000,000 kW-hr/year, which converts to 446,689 MW on a continuous basis. It seems important to note that plant nameplate capacity is generally the highest designed output, typically sized for the highest anticipated peak usage for the facility, which will be much higher than the average use.

[iv] From a separate projection provided by the EIA. You can find their energy projections here: https://www.eia.gov/analysis/projection-data.cfm#annualproj

[v] I can’t tell you how much I hate myself for this joke. Oddly, I can’t seem to make myself remove it.

[vi] US Natural gas has cost between 1 and 11 dollars per thousand cubic feet for decades (https://www.eia.gov/totalenergy/data/browser/?tbl=T09.10#/?f=M) and is currently about $3/million BTU. About 5,800 cubic feet equal a barrel of oil equivalent (BOE). This puts the range of prices in BOE as approximately $5.80 to $63.80, well below the

[vii] EIA did not include estimated costs if Carbon Capture and Sequestration were to be applied to Gas Turbines. I’m not certain whether this is due to a lack of data or technical limitation that prevents CCS from being applied to gas turbines (I can’t think of one but if anyone knows this please let me know).

[viii] From the IEA (different than EIA), US per-capita electricity consumption is 13 MWh/capita compared to 0.8 for India. India also has 3 times the population of the US. Therefore, if the US cuts the carbon footprint of electricity generation by a factor of two through the use of Natural gas, India could wipe out all of those gains by installing the exact same “more environmentally friendly” plants in order to lift their per capita energy consumption to 3 MWh/capita, less than a quarter of the US per capita demand. This is why I find it dishonest to claim that countries like India aren’t doing their fair share in multi-national climate accords that show their total emissions rising while countries like the US decrease.

[ix] I’ll get back to this in a later post. I’ve already written too much.